Retention strategies for subscription companies in 2026


The subscription economy is moving into a more selective era. Even as the market reached an estimated $1.5T in 2025, consumers are auditing what they keep, what they cancel, and what they consider “worth it.” For subscription brands, growth now depends less on adding volume at the top of the funnel and more on increasing the percentage of subscribers who reach value quickly, stay active longer, and renew with confidence.

Retention has always mattered in subscriptions. What has changed is the margin for error. With acquisition more expensive and subscribers more willing to cut services, lifecycle performance has become the difference between steady compounding growth and constant replacement.

Ever-growing subscription fatigue

Subscription fatigue has become a persistent constraint on growth. One dataset puts the share of consumers experiencing subscription fatigue at 41%. According to the 2025 Self Financial survey, the average U.S. household dropped from 4.1 subscriptions to 2.8 in a year, and CivicScience reports that 31% of streaming subscribers cancelled at least one service in 2025.

This changes the baseline assumption behind many lifecycle programs. Subscribers are not defaulting to “try one more.” They are actively filtering for subscriptions that deliver ongoing value.

Curse of generic messaging

Generic win-back and re-engagement sequences have been overused across the subscription economy. Many subscribers recognize these patterns instantly, especially when the message does not reflect their behavior or the reason they disengaged. One-size onboarding has the same problem. A single generic welcome path cannot serve the different ways subscribers arrive, explore, and reach their first meaningful outcome.

Retention programs are also losing effectiveness when value communication only appears near renewal. Renewal reminders work best when they reinforce a value story that has been built consistently throughout the lifecycle.

Subscription retention strategies in 2026

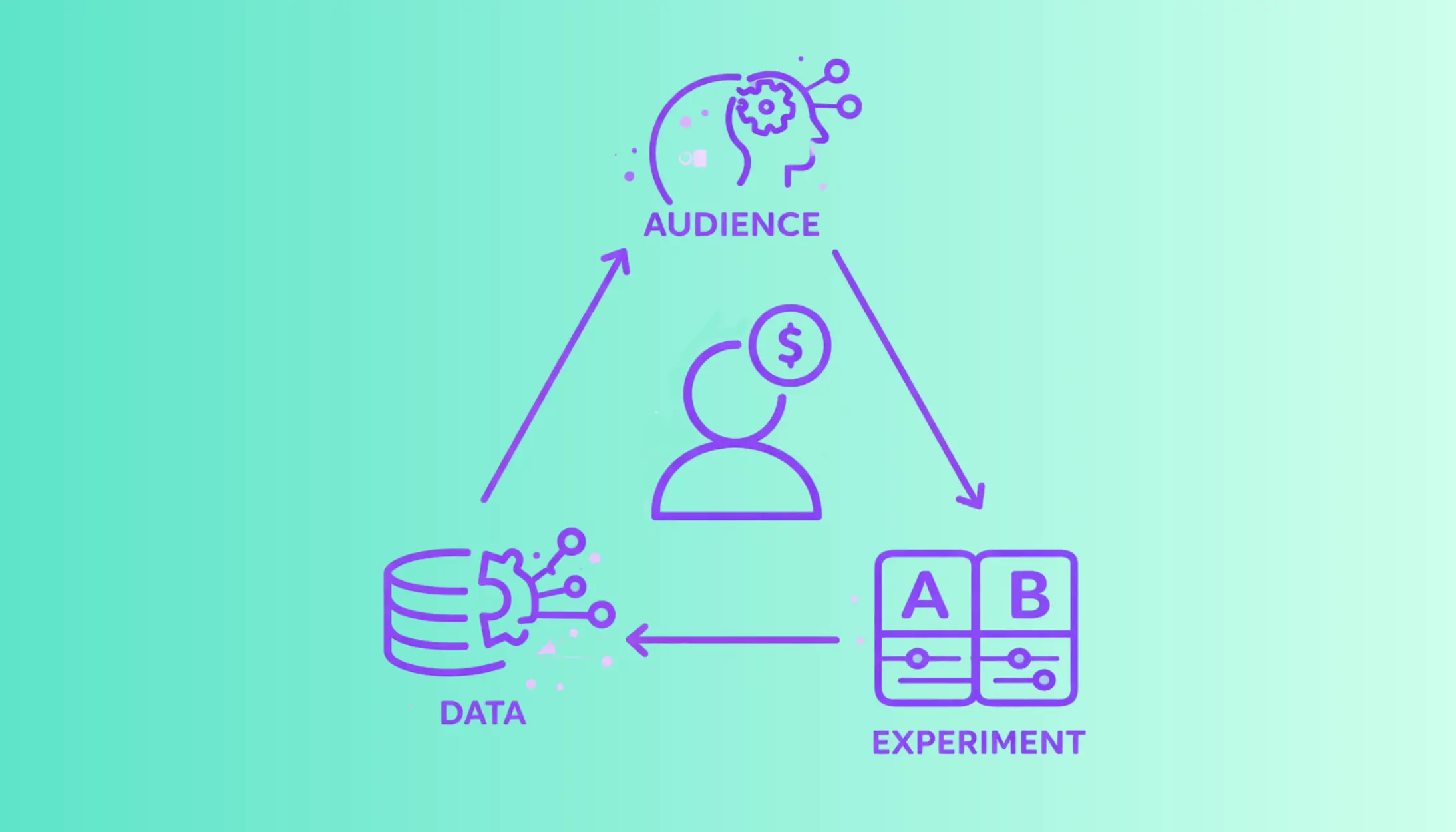

1. Behavioral audiences that refresh automatically

Subscriber outcomes are better predicted by behavior than by static attributes. The teams seeing consistent lift build audiences around observable usage patterns and subscription signals, then refresh those audiences continuously as behavior changes. That looks like identifying trialists who have not activated, paid subscribers whose engagement is decaying, subscribers showing downgrade intent, and renewal-risk cohorts whose activity trend is shifting.
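As a rough illustration of what a behavior-based audience definition can look like, here is a minimal Python sketch. The field names (`sessions_last_7d`, `viewed_downgrade_page`, and so on), the audience names, and every threshold are hypothetical and only serve the example; a real program would derive them from its own product and billing data.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass
class SubscriberSnapshot:
    """Hypothetical per-subscriber signals, recomputed on every refresh."""
    subscriber_id: str
    is_trial: bool
    sessions_last_7d: int
    sessions_prev_7d: int
    viewed_downgrade_page: bool
    days_to_renewal: int


def assign_audience(s: SubscriberSnapshot) -> Optional[str]:
    """Return the first matching audience, or None if no intervention applies.

    All thresholds below are illustrative assumptions, not recommendations.
    """
    if s.is_trial and s.sessions_last_7d == 0:
        return "trial_not_activated"
    if s.viewed_downgrade_page:
        return "downgrade_intent"
    # "Decaying" here means activity fell to half or less of the prior week.
    decaying = s.sessions_prev_7d > 0 and s.sessions_last_7d <= 0.5 * s.sessions_prev_7d
    if decaying and s.days_to_renewal <= 14:
        return "renewal_risk"
    if decaying:
        return "engagement_decay"
    return None


def refresh_audiences(snapshots: Iterable[SubscriberSnapshot]) -> Dict[str, List[str]]:
    """Rebuild audience membership from scratch using the latest snapshots."""
    audiences: Dict[str, List[str]] = {}
    for s in snapshots:
        name = assign_audience(s)
        if name is not None:
            audiences.setdefault(name, []).append(s.subscriber_id)
    return audiences
```

Because membership is recomputed from the latest snapshot on each run, a subscriber whose behavior recovers simply drops out of the audience on the next refresh, with no manual list maintenance.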

The measurable upside comes from pairing the audience with a focused intervention and tracking lift against a control group. In practice, this covers trial rescue for trialists showing low-engagement signals, downgrade and save paths for declining users, and re-engagement programs that increase both retention and engagement depth. Our customers see retention lift from well-targeted re-engagement, and significantly higher lift from timely save and downgrade interventions, because the audience definition and the timing are precise rather than generic. The results speak for themselves:

- Trial rescue targeting low-engagement signals resulted in a 7.1% retention lift

- Smart downgrades for declining users led to a 23.9% retention improvement

- Re-engagement campaigns lifted retention by 4.1% and increased pageviews by 54%
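For readers who want to see how a lift figure like those above can be computed, the sketch below compares a treated group against a control group. The article does not say whether its percentages are absolute (percentage points) or relative, so the function returns both. The function name is an assumption for illustration, and the z-score uses a standard pooled two-proportion test.

```python
import math
from typing import Dict, Optional


def retention_lift(
    treated_retained: int,
    treated_total: int,
    control_retained: int,
    control_total: int,
) -> Dict[str, Optional[float]]:
    """Compare retention in a treated group against a control group."""
    if treated_total <= 0 or control_total <= 0:
        raise ValueError("both groups need at least one subscriber")

    p_t = treated_retained / treated_total
    p_c = control_retained / control_total

    # Pooled retention rate under the hypothesis of no difference.
    pooled = (treated_retained + control_retained) / (treated_total + control_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / treated_total + 1 / control_total))

    return {
        "treated_rate": p_t,
        "control_rate": p_c,
        "absolute_lift_pp": (p_t - p_c) * 100,
        # Relative lift is undefined when the control group retained nobody.
        "relative_lift": (p_t - p_c) / p_c if p_c > 0 else None,
        # The z-score is undefined when every or no subscriber was retained.
        "z_score": (p_t - p_c) / std_err if std_err > 0 else None,
    }
```

With invented numbers, `retention_lift(840, 1000, 800, 1000)` describes a treated group retaining 84% against a control retaining 80%: a lift of 4 percentage points, or 5% relative to the control rate. Reporting both avoids ambiguity when results are shared across teams.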

2. Experimentation volume that produces patterns

Retention lift becomes more reliable when teams can run enough experiments to identify patterns. This helps avoid the spray-and-pray strategy that usually results in one-off wins. When a team can only run a handful of tests per quarter, learnings are slow and results are hard to generalize. When teams can run multiple concurrent experiments across onboarding, engagement, renewal, pricing, and win-back, they start to see repeatable mechanisms that they can roll out across cohorts.

Daily Mail’s experimentation cadence and outcomes are an example of a retention program that is treated as an operating system rather than a campaign calendar. Using AI-generated behavioral audiences, the team created experiments and measured the impact across engagement, retention, and CLV metrics, recording a 14% boost in 30-day engagement and a 32% increase in CLV.

3. Timing that matches the subscriber’s decision window

The churn decision forms when value feels less visible or the product stops fitting the moment. The lifecycle programs that perform best in 2026 do not key off calendar dates; they intervene when signals change.

Practical examples of signal-based timing include:

- Trial rescue when early engagement drops below a threshold, supported by targeted onboarding or feature/value prompts.

- Save paths when downgrade or cancellation intent appears, paired with plan flexibility or value framing, while the subscriber is still deciding.

- Engagement reinforcement when usage decays, using content, feature highlights, or benefit reminders aligned to what the subscriber already cares about.

- Temporary access or benefit adjustments when behavior suggests the subscriber is drifting, paired with a clear reason to re-engage.

The core idea here is consistency with context. Triggers should reflect behavior and subscription context, and the timing should match when the intervention can still change the outcome.
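To make the contrast with calendar-based timing concrete, here is a minimal sketch of a signal-based trigger. It finds the first day on which a subscriber's trailing activity drops well below their own recent baseline. The 7-day window and the 50% drop ratio are assumptions chosen for the example, not recommended values.

```python
from typing import List, Optional


def first_decay_day(
    daily_sessions: List[int],
    window: int = 7,
    drop_ratio: float = 0.5,
) -> Optional[int]:
    """Return the index of the first day on which the trailing `window`-day
    session count is at most `drop_ratio` times the count in the window
    immediately before it, or None if that never happens."""
    # Two full windows of history are needed before any comparison is possible.
    for day in range(2 * window - 1, len(daily_sessions)):
        recent = sum(daily_sessions[day - window + 1 : day + 1])
        baseline = sum(daily_sessions[day - 2 * window + 1 : day - window + 1])
        if baseline > 0 and recent <= drop_ratio * baseline:
            return day
    return None
```

A subscriber with steady usage never fires the trigger, while one who stops using the product fires it a few days into the decline, which is typically earlier than a fixed "day 30" or pre-renewal message would reach them.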

4. Automation that enables always-on journeys

Consider a common lifecycle scenario: a team runs a test, documents it, and moves on. No learning carries forward unless someone manually rebuilds the test for the next cohort.

Automation cannot continue to be a way to send more messages. It needs to become a mechanism for consistency and scale.

In 2026, strong programs promote winning interventions into always-on journeys. That means the same audience definition continues to update, new qualifying subscribers enter automatically, and lift continues to be measured.

5. Data connectivity across the tech stack

Retention rarely fails inside a single system. It breaks down in the gaps between them. Usage data lives in product analytics, subscription status in billing, customer context in the CRM, and messaging performance in the ESP. Without alignment, none of them tells the full story. If these systems do not work together cleanly, lifecycle programs become delayed and generic because teams cannot react to the full picture, and getting any experiment live requires heavy engineering involvement.

The subscription teams that scale retention automation unify the important signals such as:

- Billing and subscription events, including renewals, failures, pauses, downgrades, upgrades

- Product usage signals such as frequency, depth, and feature adoption

- Messaging engagement across email and other channels

- Support and service signals where relevant

This unified view is what enables real-time audience refresh, reliable triggers, clean experimentation, and measurement tied to retention and revenue outcomes.
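A unified view can be as simple as one profile per subscriber keyed by a shared ID. The sketch below assumes each system can export records as a dictionary keyed by subscriber ID; the function name, source names, and field names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict


def unify_signals(**sources: Dict[str, Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Merge per-system records into one profile per subscriber.

    Each keyword argument is a source name mapped to
    {subscriber_id: {field: value}}. Fields are prefixed with the source
    name so that identically named fields from different systems do not
    overwrite each other.
    """
    profiles: Dict[str, Dict[str, Any]] = defaultdict(dict)
    for source_name, records in sources.items():
        for subscriber_id, fields in records.items():
            for field, value in fields.items():
                profiles[subscriber_id][f"{source_name}.{field}"] = value
    return dict(profiles)
```

For example, `unify_signals(billing=billing_records, product=usage_records)` yields profiles with keys such as `billing.status` and `product.sessions_7d`. Subscribers missing from one system still appear with whatever signals exist, which makes gaps in coverage easy to spot.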

Subscription metrics that matter

Subscription teams still track open rates and clicks, but those metrics are supporting signals. The metrics that guide decisions now are the ones tied to lifecycle outcomes, such as:

- Retention lift and churn score measured against control groups over meaningful windows

- Engagement momentum, including trajectory in frequency and depth, rather than a single point-in-time session

- Revenue protection and expansion impact, including saved subscriptions, downgrade prevention, upgrade lift, and ARPU changes

- LTV and cohort durability, so lifecycle work maps to the long-term value of the base

If you can measure these reliably, you can scale with confidence. If you cannot, you end up with activity without clarity.

Building a retention program in 2026

The highest-performing retention programs share a simple operating rhythm.

They define lifecycle audiences based on behavior and subscription context using predictive AI, and those audiences refresh automatically. They run controlled experiments continuously across multiple lifecycle stages. They measure lift in retention, revenue, and engagement depth against a holdout group. They promote winners into always-on journeys so the baseline experience keeps improving without constant rebuilding.

Over time, the program becomes harder to copy because it compounds learnings into the lifecycle itself.

Closing remarks

Retention in 2026 is shaped by discipline. The teams that win are not sending more campaigns. They develop a system that learns, proves lift, and scales what works across the lifecycle.

That is exactly where Subsets fits. Subsets helps subscription teams identify high-impact audiences from lifecycle signals, run statistically sound experiments with control groups, measure retention and revenue lift over time, and turn winning interventions into always-on journeys. If you want to build a retention program that compounds rather than resets every quarter, book a demo with our team.
