State of lifecycle experimentation in 2025


In 2025, AI has become a baseline feature across MarTech platforms. Subject lines update in real time, customer journeys respond to behavioral inputs, and predictive models support decision-making at every stage. But while automation has scaled, the most consistent retention gains come from teams that build experimentation into the structure of their lifecycle strategy.

According to Snowflake’s "Modern Marketing Data Stack" report, teams that combine predictive segmentation with structured experimentation achieve 27% higher retention impact compared to those relying on personalization alone. This shift demands more than reactive automation and requires systems that can test and adapt continuously, where every interaction is measurable, and every insight informs the next step.

Era of dynamic systems

Traditional marketing worked in cycles: seasonal pushes, one-off flows, fixed winback strategies. Today, lifecycle experimentation enables continuous systems. Teams design onboarding paths that adapt to user behavior, test messaging cadence to balance value and fatigue, and refine upgrade prompts using live engagement signals.

Retention strategy is now shaped through iteration rather than assumption.

Leading marketers deploy predictive modeling, suppression logic, and dynamic creative testing to increase lifetime value while reducing manual cycles. Experimentation has moved from being a single lever to being the infrastructure behind sustained growth, with each test strengthening key business outcomes such as trial-to-paid conversion, upsell velocity, average session depth, and net revenue retention.

The role of AI in experimentation

AI expands how and when teams can experiment. It enables faster feedback loops, operationalizes strategy, and strengthens precision. It is especially valuable for optimizing message timing, adjusting lifecycle touchpoints, and detecting fatigue before performance suffers. According to WSJ, Taco Bell and KFC drove measurable increases in order frequency and ticket size through AI-led personalization.

Yet AI alone is not enough. Teams that see the most value focus on the full system by framing strong hypotheses, enforcing control groups, tracking long-term impact, and applying learnings across lifecycle stages and segments. As Anthony Thompson of PaystubHero points out, “AI only creates real business impact when it’s tested, measured, and iterated on. Without that discipline, it’s just noise dressed up as progress.”
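One common way to enforce a control group is deterministic, hash-based assignment, so a user always lands in the same group for a given experiment. The sketch below is illustrative only: the function name `assign_group`, the `holdout_pct` parameter, and the 10% default are assumptions, not a description of any specific platform.

```python
import hashlib


def assign_group(user_id: str, experiment: str, holdout_pct: float = 0.10) -> str:
    """Deterministically assign a user to 'control' or 'treatment'.

    Hypothetical helper: hashing the experiment name together with the
    user ID keeps assignments stable across sessions and independent
    between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "control" if bucket < holdout_pct else "treatment"
```

Because the assignment depends only on the user ID and the experiment name, it can be recomputed anywhere in the stack without storing a lookup table.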

What high-performing teams measure

Retention experimentation is anchored to business KPIs. Each lifecycle stage has its own set of performance signals:

- Activation: time-to-value, setup completion, trial-to-paid conversion

- Engagement: session depth, open rates over time, fatigue indicators

- Monetization: upsell rate, churned MRR saved, upgrade/downgrade ratios

- Reactivation: winback success, dormant user re-engagement, campaign-level ROAS

As each test becomes a long-term asset, every outcome tightens the connection between lifecycle operations and business results.

After running more than 200 experiments, we have grouped the most common wins from structured lifecycle testing into a few categories:

- Onboarding optimization: One publisher tested targeted content exposure during the trial phase for subscribers with low engagement. The result was a 5.6% increase in retention by showing content the user had previously enjoyed and prompting preference selection.

- Smart downgrades: For subscribers showing signs of churn, a controlled test of flexible downgrade options led to a 23.9% lift in retention, highlighting the value of offering graceful exits over abrupt cancellations.

- Ad load suppression: In one experiment, users received a time-bound offer to see fewer ads. This small reduction in friction resulted in a 36% increase in visits, +1,000 seconds in session time, and a 23% lift in pageviews.

- Content personalization: A segmentation experiment targeting declining engagement with personalized content recommendations led to a 14% lift in unique visits and a 13% improvement in breadth of content consumed.
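Lift figures like the ones above are typically read off a comparison between a control group and a treatment group. As a minimal sketch, assuming retention is tracked as a simple retained/not-retained count per group, relative lift and a two-proportion z-test could be computed as follows. The function name and the example counts are hypothetical and are not drawn from the experiments listed.

```python
import math


def retention_lift(control_retained: int, control_total: int,
                   treat_retained: int, treat_total: int):
    """Return (relative_lift, z_score, two_sided_p_value).

    Uses a pooled two-proportion z-test, one standard choice among
    several for comparing retention rates between two groups.
    """
    if control_total <= 0 or treat_total <= 0:
        raise ValueError("group sizes must be positive")
    p_c = control_retained / control_total
    p_t = treat_retained / treat_total
    if p_c == 0:
        raise ValueError("control retention is zero; relative lift is undefined")

    relative_lift = (p_t - p_c) / p_c

    # Pooled retention rate under the null hypothesis of no difference.
    pooled = (control_retained + treat_retained) / (control_total + treat_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / treat_total))
    if se == 0:
        raise ValueError("no variance in outcomes; z-test is undefined")

    z = (p_t - p_c) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return relative_lift, z, p_value


# Hypothetical counts: 500 of 1,000 retained in control, 550 of 1,000 in treatment.
lift, z, p = retention_lift(500, 1000, 550, 1000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p:.3f}")
```

Reporting the p-value alongside the lift helps separate a real effect from noise before a variant is rolled into a live journey.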

Guardrails make scaling sustainable

According to Twilio’s CDP Report, 67% of marketers name data accuracy and governance as their top challenge in AI-powered campaigns. Experimentation frameworks that track long-term performance, log variable impact, and embed human oversight have become essential.

But as AI generates more messaging and triggers, trust risks rise. Fatigue, misalignment, and over-delivery can compromise long-term engagement. Leading teams apply clear guardrails and define governance structures that protect brand equity and user experience. These include rules for testing frequency, automated alerts for underperformance, and clear ownership over experiment documentation. The goal is compliance that gives confidence in the outputs of automated systems.
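Guardrails of this kind can be expressed as small, explicit rules. The sketch below is one possible shape, assuming a cap on concurrent tests per user and an alert when a variant trails control by more than a set margin. The class name, field names, and thresholds are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class Guardrail:
    # Hypothetical thresholds; tune to your own program.
    max_active_tests_per_user: int = 2
    max_relative_drop: float = 0.05  # alert if a variant trails control by more than 5%


def can_enroll(active_tests_for_user: int, rule: Guardrail) -> bool:
    """Testing-frequency rule: block enrollment once the user hits the cap."""
    return active_tests_for_user < rule.max_active_tests_per_user


def should_alert(control_rate: float, variant_rate: float, rule: Guardrail) -> bool:
    """Underperformance rule: flag a variant that falls too far below control."""
    if control_rate <= 0:
        return False  # no baseline to compare against
    drop = (control_rate - variant_rate) / control_rate
    return drop > rule.max_relative_drop
```

Keeping the thresholds in one documented place also supports clear ownership: whoever changes a limit changes it in the rule itself, not in scattered campaign settings.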

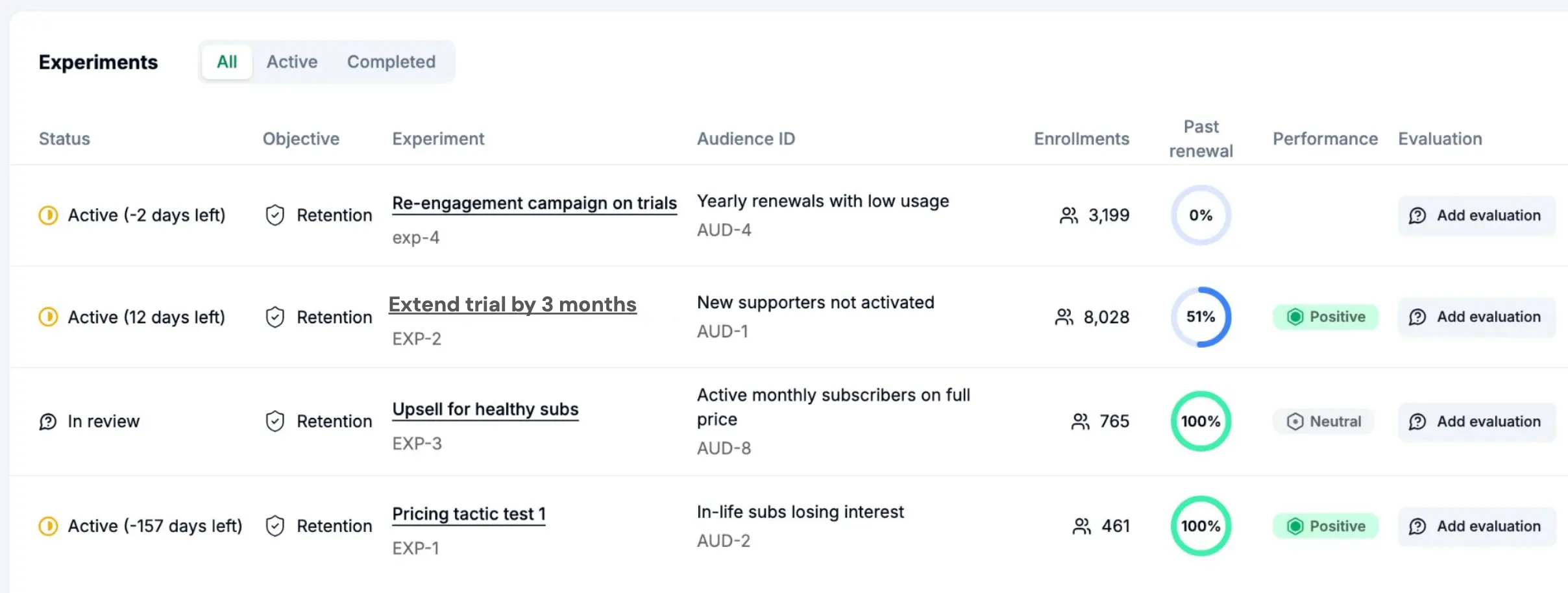

Where Subsets fits the picture

Subsets was built to make structured experimentation operational across the marketing lifecycle:

- Segment audiences by intent, behavior decay, or engagement thresholds

- Design and run controlled A/B tests with pre-set variables and clear success metrics

- Roll winning test variants directly into live journeys and create an always-on retention engine

Closing insight

Experimentation is the foundation for lifecycle marketing in 2025.

The highest-performing teams build systems that learn faster than their competitors. They measure across stages, optimize touchpoints, and apply insight at scale. Retention becomes less about effort and more about compounding returns through structured, measurable iteration.

If you want to build a lifecycle experimentation engine that is built to last and gives your team a real retention advantage, book a demo with our team today.
