Predictive models for closing the feedback loop in retention experimentation

Retention experimentation has evolved from reactive measurement to predictive optimization. Knowing what worked is no longer enough: to build successful retention engines, commercial teams need to understand why it worked, for whom, and what to do next.

This is where predictive models come in. They turn raw lifecycle data, such as usage signals, purchase frequency, and engagement patterns, into structured intelligence that helps teams test, learn, and automate retention strategies at scale.

Predictive models are the heart of modern retention

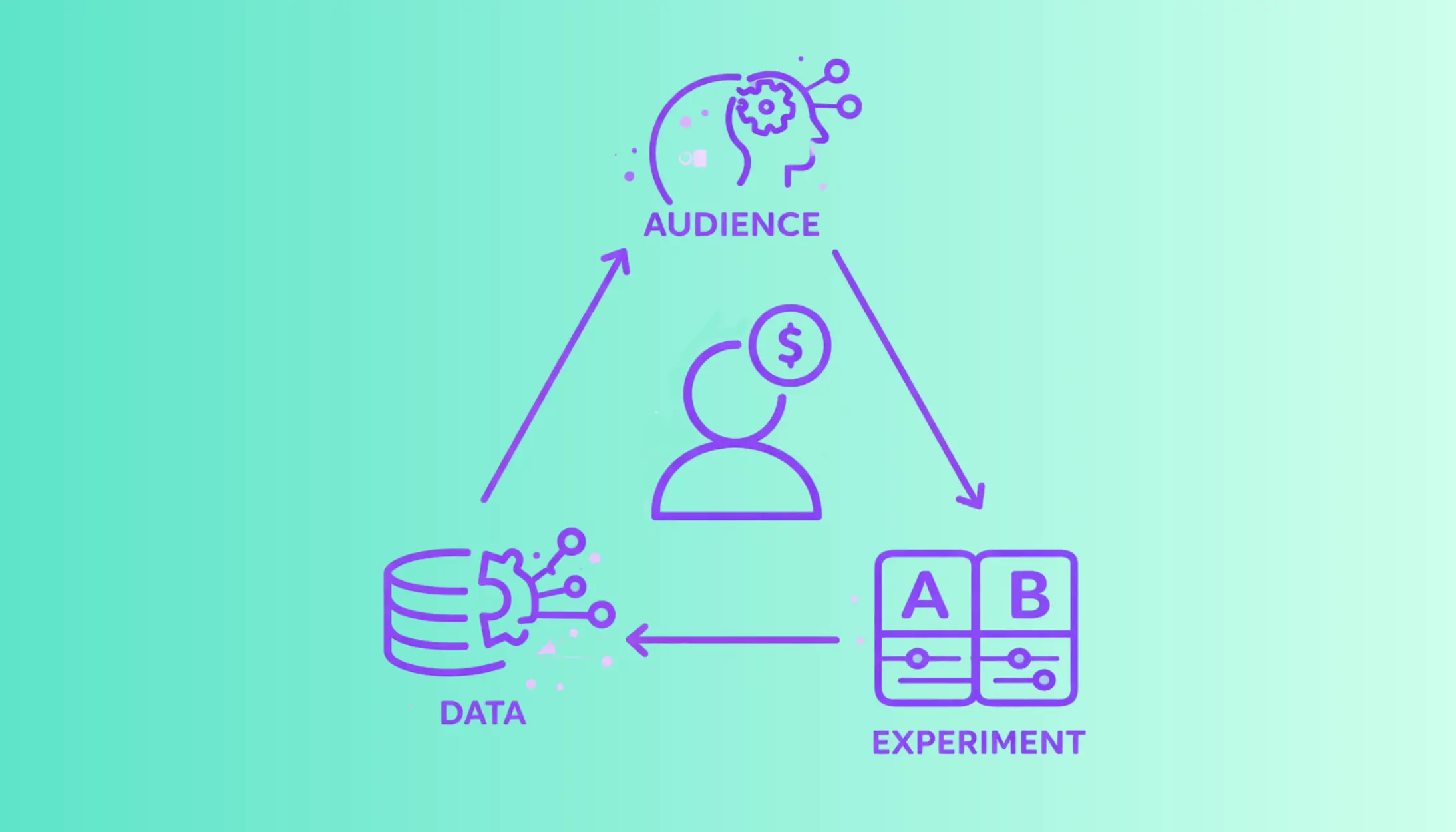

For consumer subscription companies, predictive models power the feedback loop between experimentation and automation. They ensure that every retention initiative, whether it is a save offer, an upsell path, or a reactivation sequence, gets smarter with each cycle.

Closing the loop

Most lifecycle programs operate linearly: a campaign is launched, short-term results are measured, and the process repeats for another segment of subscribers. Predictive modeling closes that loop by continuously feeding outcomes from experiments back into the system. This helps teams refine audience predictions, improve segmentation logic, and design stronger interventions.

A traditional A/B test can tell you which variant performed better. Predictive models help explain why that result happened and indicate which users are most likely to respond next time. By integrating model outputs directly into experimentation tools, retention programs shift from “set and analyze” to “learn and act.”

How predictive models work in the retention lifecycle

Predictive models ingest historical engagement data, such as logins, purchases, or content interactions, and assign probabilities to key outcomes like churn, upgrade, or reactivation. Each model represents a hypothesis in numeric form, for example: “Subscribers with high feature usage but low purchase frequency have a 64% chance of churning within 30 days.”
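To make the idea of a “hypothesis in numeric form” concrete, here is a minimal sketch of a logistic churn score. The feature names, weights, and bias are hypothetical placeholders; a real model would learn them from historical engagement data rather than having them set by hand.

```python
import math

# Hypothetical, hand-set weights for a logistic churn model. In practice these
# would be fitted on historical engagement data, not chosen manually.
WEIGHTS = {
    "feature_usage": -0.3,       # standardized feature-usage score
    "purchase_frequency": -1.2,  # standardized purchases per month
}
BIAS = -0.2


def churn_probability(features: dict) -> float:
    """Return an estimated 30-day churn probability for one subscriber."""
    # A weighted sum of the features gives the log-odds of churning.
    log_odds = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    # The logistic (sigmoid) function maps log-odds to a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-log_odds))


# A subscriber one standard deviation above average on usage, one below on purchases.
p = churn_probability({"feature_usage": 1.0, "purchase_frequency": -1.0})
print(f"{p:.2f}")  # prints 0.67
```

Because the purchase-frequency weight is strongly negative, low purchase frequency raises the score even for a heavy user, matching the shape of the example hypothesis. The exact probability depends entirely on the assumed weights.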

Lifecycle experimentation then validates or refines these probabilities. The feedback loop works like this:

- Model prediction: Pull raw data from the tech stack and score subscribers to identify at-risk, upgrade-ready, or dormant cohorts.

- Experimentation: Run controlled retention experiments for each cohort.

- Outcome capture: Measure lift, retention rate, and engagement changes.

- Model refinement: Feed results back into the model to improve accuracy.

- Automation: Deploy the updated strategy to new, similar audiences.
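The model refinement step can take many forms. One simple option, sketched here as an assumption rather than a prescribed method, is a Beta-Binomial update: the model's predicted churn rate for a cohort becomes a prior worth a chosen number of pseudo-observations, and churn outcomes captured from that cohort's control group update it. The `strength` parameter and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CohortBelief:
    """Beta(alpha, beta) belief about a cohort's churn rate."""
    alpha: float
    beta: float

    @classmethod
    def from_prediction(cls, predicted_rate: float, strength: float = 100.0) -> "CohortBelief":
        # Treat the model's prediction as if it were `strength` prior observations.
        return cls(predicted_rate * strength, (1.0 - predicted_rate) * strength)

    def update(self, churned: int, retained: int) -> "CohortBelief":
        # Conjugate update: add observed churners and retained users to the counts.
        return CohortBelief(self.alpha + churned, self.beta + retained)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


prior = CohortBelief.from_prediction(0.20)          # model predicted 20% churn
posterior = prior.update(churned=30, retained=70)   # control group: 30 of 100 churned
print(f"{prior.mean:.3f} -> {posterior.mean:.3f}")  # prints 0.200 -> 0.250
```

Using control-group outcomes matters here: treated users' behavior reflects the intervention, so it measures lift rather than the baseline risk the model is trying to predict. A larger `strength` makes the original prediction harder to move.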

With each cycle, predictions become more precise, until the model becomes the foundation for every retention decision.

Building predictive models that drive business outcomes

Predictive modeling is about identifying the signals that matter most. Effective retention models prioritize clarity. Three pillars define a useful predictive model:

- Data quality and recency: Retention insights are only as good as the data behind them. Transactional logs, session history, and support interactions must update continuously so that churn risk predictions reflect real-time behavior. A delayed signal means a missed save opportunity.

- Interpretability: For commercial teams, a model must explain itself. Explainable AI techniques and interpretable models surface the reasons behind a prediction, such as a “decrease in premium content views” or “multiple billing pause attempts.” These explanations help teams design targeted experiments rooted in actual subscriber behavior.

- Connection to experimentation: A model has little value if it sits outside the marketing workflow. The most effective systems integrate predictive modeling into lifecycle experimentation tools, automatically generating audience segments, confidence intervals, and retention curves.
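For a linear or logistic model, one straightforward way to surface the “why” is to rank each feature's contribution to the log-odds (its weight times its value). The sketch below assumes such a model; the feature names and weights are hypothetical, and non-linear models would need an attribution method such as SHAP instead.

```python
def top_reasons(weights: dict, features: dict, top_k: int = 2) -> list:
    """Return the features that raise churn risk the most, largest first."""
    # Each feature's contribution to the log-odds is its weight times its value.
    contributions = {name: w * features.get(name, 0.0) for name, w in weights.items()}
    ranked = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    # Keep only features that push risk up; negative contributions lower it.
    return [name for name, c in ranked[:top_k] if c > 0]


weights = {"premium_views_change": -0.9, "billing_pause_attempts": 0.7, "support_tickets": 0.2}
subscriber = {"premium_views_change": -2.0, "billing_pause_attempts": 3.0, "support_tickets": 1.0}
print(top_reasons(weights, subscriber))
# prints ['billing_pause_attempts', 'premium_views_change']
```

The returned names map directly to explanations like “multiple billing pause attempts” and “decrease in premium content views,” which can seed targeted experiment hypotheses.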

Predictive audiences as the new segmentation standard

Traditional segmentation relies on static traits like geography or subscription tier. Predictive segmentation uses real-time probabilities. Instead of labeling a user as “active,” the model might label them as “likely to downgrade within 14 days”.

This approach changes how teams plan campaigns:

- Proactive save paths: Trigger flexible plan offers before usage drops below a defined threshold.

- Smart upsells: Identify high-intent users showing increased engagement with premium features.

- Dormant recovery: Prioritize reactivation flows for users whose inactivity mirrors historical churn patterns.
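A minimal sketch of how model scores might route subscribers into the three predictive audiences above. The score fields, thresholds, and audience names are hypothetical and would be tuned per business.

```python
from dataclasses import dataclass


@dataclass
class SubscriberScores:
    downgrade_risk: float  # model's P(downgrade within 14 days)
    upgrade_intent: float  # model's P(upgrade when offered premium)
    lapse_risk: float      # model's P(a dormant user never returns)
    is_dormant: bool


def assign_audience(s: SubscriberScores) -> str:
    # Dormant users are handled separately from active ones.
    if s.is_dormant:
        return "dormant_recovery" if s.lapse_risk >= 0.5 else "no_action"
    # Check save paths before upsells so at-risk users are not sent upgrade offers.
    if s.downgrade_risk >= 0.5:
        return "proactive_save"
    if s.upgrade_intent >= 0.3:
        return "smart_upsell"
    return "no_action"


print(assign_audience(SubscriberScores(0.7, 0.4, 0.0, False)))  # prints proactive_save
```

The ordering is a design choice: a subscriber who scores high on both downgrade risk and upgrade intent lands in the save path, since an upsell offer to someone about to downgrade is likely to backfire.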

Predictive audiences ensure retention efforts are focused where they can make a measurable impact.

Designing experiments with predictive precision

Every retention test should start with a prediction: What do we expect to happen, and with whom? Predictive models make that expectation explicit. They provide a forecast baseline that experiments can validate or disprove.

For example, a model predicts a 20% churn risk among users inactive for 15 days. The team runs an email or push campaign with a loyalty incentive. After the experiment, results show a 12% reduction in churn versus control. The model updates its parameters to reflect new behavior, improving future accuracy.
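The example does not say whether the 12% reduction is relative or absolute. Assuming it is relative, the sketch below shows how such a result might be computed and checked with a pooled two-proportion z-test before it is fed back into the model. The group sizes and churn counts are hypothetical, chosen so that control churn matches the predicted 20%.

```python
import math


def churn_lift(control_churned: int, control_n: int, treated_churned: int, treated_n: int):
    """Return (relative churn reduction, z statistic, two-sided p-value)."""
    p_control = control_churned / control_n
    p_treated = treated_churned / treated_n
    relative_reduction = (p_control - p_treated) / p_control
    # Pooled two-proportion z-test for the difference in churn rates.
    pooled = (control_churned + treated_churned) / (control_n + treated_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / treated_n))
    z = (p_control - p_treated) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return relative_reduction, z, p_value


rel, z, p_value = churn_lift(control_churned=400, control_n=2000, treated_churned=352, treated_n=2000)
print(f"reduction={rel:.1%} z={z:.2f} p={p_value:.3f}")
```

With these hypothetical counts, churn falls from 20% to 17.6%, but z is only about 1.94 and the two-sided p-value is about 0.05, so a 12% relative reduction on 2,000 users per arm sits at the edge of conventional significance. Checking this first keeps noise from being fed back into the loop.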

This disciplined loop ensures that each test adds structural value instead of producing isolated insights.

Measuring success from prediction to impact

Predictive modeling improves not just targeting but also measurement. Instead of stopping at open or click rates, teams can quantify real business impact tied to predictions.

- Conversion: Track how predictive targeting improves trial-to-paid upgrades compared to random outreach.

- Retention lift: Measure whether predicted at-risk segments that received interventions retained longer than the control groups.

- Revenue impact: Quantify protected MRR from saved users or incremental revenue from upsells that were predicted to convert.

- Engagement depth: Analyze how personalized interventions influence session time, feature breadth, or purchase frequency.
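Retention lift and revenue impact can share one calculation: compare the treated group's rate with the control group's and multiply the difference by a per-user value. This is a minimal sketch with hypothetical numbers and a hypothetical monthly ARPU; it assumes the control group is a valid counterfactual for the treated group.

```python
def incremental_value(treated_n: int, control_rate: float, treated_rate: float, value_per_user: float):
    """Return (incremental users, incremental value) attributable to the intervention."""
    # Users who retained (or converted) beyond what the control rate would predict.
    incremental_users = treated_n * (treated_rate - control_rate)
    return incremental_users, incremental_users * value_per_user


# Protected MRR: 80% retention in control vs 82.4% in treatment, 12.0 monthly ARPU.
saved, mrr = incremental_value(2000, 0.80, 0.824, 12.0)
print(f"{saved:.0f} saved subscribers, {mrr:,.0f} protected MRR")
# prints 48 saved subscribers, 576 protected MRR
```

The same function covers upsells: pass trial-to-paid or upgrade conversion rates instead of retention rates, and incremental revenue per upgrade as the per-user value.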

By aligning model performance with retention metrics, teams can justify investment and improve forecasting accuracy.

Integration into automation systems

The feedback loop closes when experiment results directly influence live journeys. If a downgrade save path consistently delivers a 10% retention lift, the system should automatically promote it to an always-on journey for all similar profiles. Predictive models make this scalable by identifying when a user newly fits that “high-risk” profile and enrolling them into the winning flow automatically.
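A sketch of the promotion and enrollment logic described above, under stated assumptions: “consistently” is interpreted as winning a minimum number of consecutive test cycles with a statistically significant lift, and “newly fits” as a risk score crossing a threshold between two scoring runs. All names and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    relative_lift: float  # e.g. 0.10 for a 10% retention lift over control
    p_value: float
    cycles_won: int       # consecutive test cycles in which the variant beat control


def should_promote(r: VariantResult, min_lift: float = 0.10, alpha: float = 0.05, min_cycles: int = 2) -> bool:
    # Promote to an always-on journey only when the lift is large, significant, and repeated.
    return r.relative_lift >= min_lift and r.p_value < alpha and r.cycles_won >= min_cycles


def newly_high_risk(previous_score: float, current_score: float, threshold: float = 0.5) -> bool:
    # Trigger only on the upward crossing so users are not re-enrolled on every scoring run.
    return previous_score < threshold <= current_score


print(should_promote(VariantResult(0.11, 0.01, 3)), newly_high_risk(0.42, 0.57))  # prints True True
```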

Automation built on predictive intelligence ensures that every proven result compounds over time, turning experimentation into infrastructure rather than a recurring manual effort.

Practical use cases for predictive modeling in retention

- Onboarding acceleration: Predict which trial users are likely to convert early based on engagement with premium features. Deliver targeted prompts or in-app tours to shorten time-to-value.

- Downgrade prevention: Use churn probability models to surface subscribers reducing usage or visiting downgrade pages. Offer plan flexibility or highlight underused features before cancellation occurs.

- Dormant reactivation: Classify dormant users by churn reason (price sensitivity, fatigue, or competitor switching) and trigger the right reactivation message for each group.

- Upsell optimization: Identify users whose consumption patterns resemble those of existing premium customers. Offer relevant upgrades backed by behavioral similarity scores.
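Behavioral similarity scores can be computed in several ways. One common choice, assumed here, is cosine similarity between a user's usage vector and the average (centroid) usage vector of existing premium customers. The usage dimensions and numbers are hypothetical; in practice features should be scaled to comparable units first.

```python
import math


def centroid(vectors: list) -> list:
    """Average usage vector across a group of users."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine_similarity(a: list, b: list) -> float:
    """Similarity of usage mix, ignoring overall volume; 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


# Hypothetical weekly usage vectors: [hours streamed, downloads, profiles used].
premium_profile = centroid([[10, 5, 3], [12, 6, 4]])
for user, usage in {"user_a": [9, 5, 3], "user_b": [1, 0, 8]}.items():
    print(user, round(cosine_similarity(usage, premium_profile), 2))
```

Cosine similarity compares the shape of usage rather than its volume, so a light user with a premium-like mix still scores high; teams that care about volume could combine it with an engagement-level threshold.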

Cross-functional alignment

Predictive models bridge teams.

- Marketing uses predictions to design experiments and automate campaigns.

- Product teams use insights to detect friction points or feature gaps.

- Finance translates retention lift into projected LTV and revenue forecasts.

This alignment ensures that retention performance becomes a shared responsibility, supported by transparent, data-driven insight.
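For the finance translation, a common back-of-the-envelope model (assumed here, not taken from the text) treats the monthly churn rate $c$ as constant, so expected subscriber lifetime is $1/c$ months. It ignores gross margin, discounting, and expansion revenue:

$$
\text{LTV} \approx \frac{\text{ARPU}}{c}, \qquad
\Delta\text{LTV} = \text{ARPU}\left(\frac{1}{c_{\text{treated}}} - \frac{1}{c_{\text{control}}}\right)
$$

For example, with a hypothetical ARPU of 10 USD per month and monthly churn falling from 5% to 4.5%, LTV rises from 200 USD to about 222 USD, a gain of roughly 22 USD per subscriber.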

Common pitfalls and how to avoid them

- Overfitting: A model that fits past data too closely may fail on future subscribers. Keep validation sets separate and retrain models frequently.

- Ignoring causality: Correlation is not causation. Predictive modeling should complement experimentation, not replace it. Test assumptions before scaling interventions.

- Lack of human oversight: Automation must have guardrails. Teams should monitor fatigue, frequency, and sentiment to prevent over-messaging high-value subscribers.
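One way to keep validation sets honest, assumed here as a swapped-in technique, is an out-of-time holdout: train on snapshots before a cutoff date, validate on later ones, and compare an error metric such as the Brier score (the mean squared error of predicted probabilities) on both. The row fields and data are hypothetical.

```python
from datetime import date


def time_split(rows: list, cutoff: date):
    """Train on snapshots before the cutoff, validate on snapshots from the cutoff onward."""
    train = [r for r in rows if r["snapshot"] < cutoff]
    valid = [r for r in rows if r["snapshot"] >= cutoff]
    return train, valid


def brier_score(rows: list) -> float:
    """Mean squared error between predicted churn probability and actual outcome (0 or 1)."""
    return sum((r["predicted"] - r["churned"]) ** 2 for r in rows) / len(rows)


rows = [
    {"snapshot": date(2024, 1, 1), "predicted": 0.9, "churned": 1},
    {"snapshot": date(2024, 1, 1), "predicted": 0.1, "churned": 0},
    {"snapshot": date(2024, 3, 1), "predicted": 0.8, "churned": 0},
    {"snapshot": date(2024, 3, 1), "predicted": 0.3, "churned": 1},
]
train, valid = time_split(rows, cutoff=date(2024, 2, 1))
print(f"train={brier_score(train):.3f} valid={brier_score(valid):.3f}")
```

In this toy data the model looks nearly perfect on the earlier period and poorly calibrated on the later one. A gap like that between training and out-of-time error is the overfitting symptom the pitfall warns about.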

Predictive models as the foundation for scalable retention

When predictive models and experimentation work together, retention becomes measurable and repeatable. Every campaign contributes new data that sharpens future predictions, and every prediction guides better experimentation. This is how the feedback loop closes: a continuous cycle of learning, validation, and automation.

In an environment where acquisition costs keep rising, retention is the sustainable growth lever. Predictive models make retention systematic. They allow subscription brands to anticipate churn, personalize interventions, and scale what works, without relying on guesswork or gut instinct.

Lifecycle marketing no longer runs on static campaigns but on learning systems that adapt as subscriber behavior evolves. Predictive modeling is the intelligence layer that makes this evolution possible.

Final thoughts

Retention experimentation is most powerful when every result feeds the next decision. Predictive models transform that principle into a process. They give commercial teams the foresight to act, the evidence to trust their actions, and the automation to scale them efficiently.

If you are building a modern retention engine, start by closing the loop: connect prediction, experimentation, and automation into one system. Subsets, a lifecycle experimentation and automation platform, enables this without requiring help from engineering teams.

Designed for consumer subscription businesses, it helps teams identify predictive audiences, run structured retention experiments, and promote winning results into always-on journeys that drive measurable improvements in engagement, retention, and lifetime value.

If you are planning on supercharging your retention experimentation game, talk to our team today!
